Two significant reports have laid out which jobs are in greatest danger of being taken over by AI tools like ChatGPT. Soon after, Silicon Valley’s biggest names sounded the alarm over AI’s destructive potential. Is AI really going to ruin our lives? Or are we just crap at predicting the future?

Researched by: Rachel John

The tale of two reports

Over the past week, two key papers have laid out big-picture predictions about the impact of artificial intelligence on the future of employment. One was released by Goldman Sachs. The other was put out by researchers at OpenAI (of ChatGPT fame) and the University of Pennsylvania. Both reach roughly the same conclusions.

The scale: According to Goldman Sachs, generative AI could expose the equivalent of around 300 million full-time jobs to some form of automation. Generative AI is any tool that can produce content, be it text, images or software code, in response to text prompts, and whose output is "indistinguishable from human output." The report estimates that AI could substitute up to a quarter of current work.

OTOH, the OpenAI research focuses entirely on the US job market:

“Our findings indicate that approximately 80% of the U.S. workforce could have at least 10% of their work tasks affected by the introduction of GPTs, while around 19% of workers may see at least 50% of their tasks impacted,” lead author Tyna Eloundou and her colleagues estimate. “The influence spans all wage levels, with higher-income jobs potentially facing greater exposure.”

Who loses out? Unlike previous technological advances, AI is likely to affect better-educated workers with college degrees. Machine learning will not replace most jobs that require manual labour. According to Goldman Sachs, only 6% of jobs in construction will be affected by AI. OTOH, the greatest impact will be on administrative (46%) and legal (44%) professions.

The OpenAI paper reached a similar conclusion:

Information-processing roles—including public relations specialists, court reporters and blockchain engineers—are highly exposed, they found. The jobs that will be least affected by the technology include short-order cooks, motorcycle mechanics and oil-and-gas roustabouts.

In general, the paper found that state-of-the-art GPTs excel at higher-order tasks such as translation, classification, creative writing and generating computer code. Think poets, PR experts, web designers, mathematicians and financial analysts.

But, but, but: OpenAI researchers use careful language—claiming jobs will be significantly “influenced” or “augmented” by generative AI—but not replaced entirely. The reasoning:

Exposure predicts nothing in terms of what will change and how fast it will change. Human beings reject change that compromises their interests and the process of implementing new technologies is often fraught with negotiation, resistance, “terror and hope.”

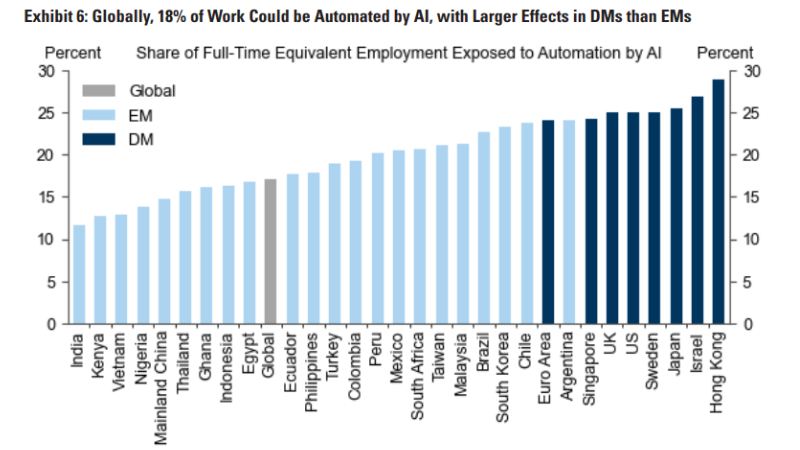

Point to note: According to Goldman Sachs, this is also why the impact will be greater on advanced economies—with roughly two-thirds of jobs in the US and Europe are exposed to some degree of AI automation. Since many of the jobs in the developing world are manual, only a fifth of the jobs in major economies will be affected. As you can see, India is on the lowest end of that graph:

The good news: The OpenAI folks say that generative AI is unlikely to fully replace workers because “considering each job as a bundle of tasks, it would be rare to find any occupation for which AI tools could do nearly all of the work.” But the paper is extremely vague and jargon-heavy about the potential benefits or drawbacks.

Goldman Sachs is more forthcoming about the upside—claiming that the AI disruption will bring greater employment in the long run:

Technological innovation that initially displaces workers drives employment growth over a long horizon…60% of workers today are employed in occupations that did not exist in 1940, implying that over 85% of employment growth over the last 80 years is explained by the technology-driven creation of new positions.

According to the company, the resulting “productivity boom” could raise annual global gross domestic product (GDP) by 7% over a 10-year period.

Point to note: It remains to be seen who will benefit the most from this boom. The AI industry is already creating its own version of “sweatshops” in developing countries. While Silicon Valley companies are shelling out $300K+ salaries for ‘prompt engineers’, they are paying a pittance to ‘data annotators’ in poorer countries, who have the tedious job of labelling content for machines.

For example, to make sure that ChatGPT doesn’t traumatise nice people in the West with abusive content, OpenAI used workers in Kenya, paid less than $2 per hour, to identify hate speech and images of violence and sexual abuse.

Is this Mary Shelley’s AI?

Mary Shelley’s ‘Frankenstein’ perfectly encapsulates the human fear of mad scientists who willy-nilly create a monster in their quest for ‘progress’. Every great technological leap, be it nuclear power or the internet, has inspired similar doomsday fears. While no one is predicting that ChatGPT will bring about the apocalypse, AI taps into a deeply held fear of being replaced by machines that will unleash misery on their creators.

Ringing the alarm bell: A letter signed by some of the biggest names in tech, including Elon Musk, Steve Wozniak and AI startup founder Emad Mostaque, urgently called for a pause in advanced AI development. They say AI labs should stop training systems more powerful than OpenAI’s GPT-4 for at least six months, and use that time to develop safety protocols that ensure such systems are safe “beyond a reasonable doubt.” The letter darkly warns:

Unfortunately, this level of planning and management is not happening, even though recent months have seen AI labs locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one—not even their creators—can understand, predict, or reliably control.

Yup, it’s the Frankenstein fable all over again.

Prudent or paranoid? The letter was released by the Future of Life Institute (a nonprofit that Musk has funded), which lays out two scenarios on its website, both of which sound oddly like Hollywood plots. Scenario one is an AI programmed to do something destructive. Example: AI-controlled weapons:

Moreover, an AI arms race could inadvertently lead to an AI war that also results in mass casualties. To avoid being thwarted by the enemy, these weapons would be designed to be extremely difficult to simply "turn off," so humans could plausibly lose control of such a situation.

Scenario two involves a machine that uses a destructive method to achieve a beneficial goal:

If you ask an obedient intelligent car to take you to the airport as fast as possible, it might get you there chased by helicopters and covered in vomit, doing not what you wanted but literally what you asked for. If a superintelligent system is tasked with an ambitious geoengineering project, it might wreak havoc with our ecosystem as a side effect, and view human attempts to stop it as a threat to be met.

But these sci-fi-sounding claims may point to a bigger problem: humans are mostly awful at predicting the future, especially when it comes to technology.

The terrible history of tech predictions

Yes, AI will indeed transform the world, but here’s why you should take all this prognosticating with a giant pinch of salt.

Too much, too fast: We routinely overestimate how quickly technology will advance. Example:

In 1954 a Georgetown-IBM team predicted that language translation programs would be perfected in three to five years. In 1965 Herbert Simon said that “machines will be capable, within twenty years, of doing any work a man can do.” In 1970 Marvin Minsky told Life magazine, “In from three to eight years we will have a machine with the general intelligence of an average human being.”

The problem of hubris: In fact, the founders of AI research, who launched the field at a Dartmouth conference in 1956, claimed that “every aspect of learning or any other feature of intelligence can be so precisely described that a machine can be made to simulate it.” Some 53 years later, in 2009, Israeli neuroscientist Henry Markram claimed that within a decade his research group would “reverse-engineer the human brain by using a supercomputer to simulate the brain’s 86 billion neurons and 100 trillion synapses.” Well, it’s 2023 and we still don’t understand how our brain works, let alone reverse-engineer it.

The more you know…: the less likely you are to be accurate about the future of technology. In 1943, IBM chairman Thomas Watson reportedly said that there was “a world market for maybe five computers.” In 1995, 3Com founder Robert Metcalfe predicted the internet “will soon go spectacularly supernova and in 1996 catastrophically collapse,” and later literally had to eat his words by blending his column into a smoothie. When it comes to tech predictions, expertise can often lead to tunnel vision.

The human factor: Grand tech predictions tend to discount human interference. As Slate notes:

In 2014, Ray Kurzweil predicted that by 2029, computers will have human-level intelligence and will have all of the intellectual and emotional capabilities of humans, including “the ability to tell a joke, to be funny, to be romantic, to be loving, to be sexy.” As we move closer to 2029, Kurzweil talks more about 2045.

More importantly, there is little indication that most humans want computers to be romantic, loving or sexy—except for niche fetishists who buy sex robots today. Technological change is driven by what we want or need—not simply by what is possible.

Another example: Consider the fear that we will soon have ‘designer babies’ thanks to gene-editing technology. How will that work in a global culture obsessed with the next big upgrade?

Even if Crispr technology makes that possible on some level, the perfect baby probably wouldn’t grow up into a perfect adult, said [Technology writer Edward] Tenner. We’re not consistent in what we consider perfect — “you can imagine a wave of [engineered] babies … and by the time they grow up, they’d be obsolete,” he explained.

OK, that makes us giggle, but you get the point.

Quote to note: Back in 1843, in her notes on Charles Babbage’s Analytical Engine (a design for a mechanical general-purpose computer), Ada Lovelace observed:

In considering any new subject, there is frequently a tendency, first, to overrate what we find to be already interesting or remarkable; and, secondly, by a sort of natural reaction, to undervalue the true state of the case, when we do discover that our notions have surpassed those that were really tenable.

This is essentially the premise of what is today called Amara’s Law, after futurist Roy Amara: “We tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run.”

An amusing point to note: Artists have often proved as accurate as technologists in predicting the future. For example, this 1930s ‘prophecy’ of FaceTime:

Better yet: James Bond receiving a text on his smartwatch in ‘The Spy Who Loved Me’ circa 1977:

The bottom line: The greatest danger posed by an AI tool is the tech company that creates it. Big Tech has already given us vast social media platforms that spread disinformation, misuse user data and contribute to mental-health problems among teens. All of it stems from the frenzied need to ‘move fast and break things’, which could very well have been the motto of Dr Frankenstein, except he was motivated by hubris, not share price.

Reading list

- Read the original Goldman Sachs report and the OpenAI/UPenn paper, which has not yet been peer-reviewed.

- Forbes is excellent on the history of tech predictions—see especially the bit on female secretaries.

- Bloomberg News explains why tech predictions often turn out to be wrong.

- Slate argues this is especially true when it comes to AI.

- Since we didn’t really get into the upside of AI, check out The New York Times’ experiment with Google’s Bard, and this piece on AI as a teacher’s assistant.

- Forbes has more on how the tech can revolutionise medicine.

- The Washington Post and TIME offer a balanced look, and also underline the problem with Silicon Valley culture.

- Still don’t really get the ChatGPT thing? Our Big Story offers an easy guide.

souk picks