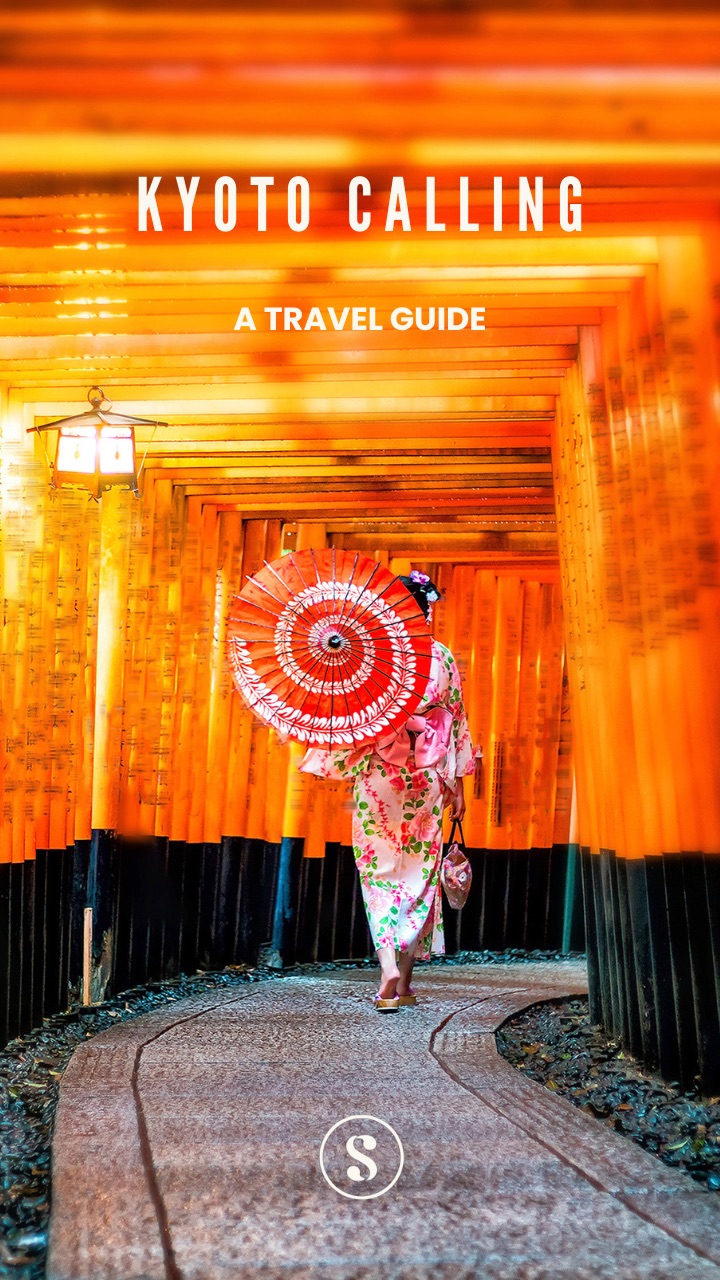

Sign up for Advisory! It’s free and so is our exclusive

welcome

aboard travel guide to Kyoto.

Our weekly goodie bag includes:

What you won’t get: Spam or AI slop!

Already a user ? Login

Privacy Policy. You can unsubscribe any time.Tell us a bit more about yourself to complete your profile.

Thank you for joining our community.

A consortium of 17 news publications has come together to roll out a flood of stories on Facebook. These are based on tens of thousands of internal documents shared by whistleblower Frances Haugen, a former Facebook product manager. The so-called Facebook Papers also offer a number of significant revelations about India. The big picture: the platform's algorithm is rigged to promote hate and disinformation, and the company's reluctance to crack down on groups associated with the RSS, Bajrang Dal, etc. ensures that anti-Muslim content spreads unchecked.

This isn’t the first big scoop on Facebook’s activities in India. Two previous Wall Street Journal investigations revealed the following:

Please note: the WSJ is behind a paywall, but we explained each of these investigations here and here.

#1 A hate-promoting algorithm: In February 2019, a Facebook employee set up a test account posing as a user from Kerala. The aim was to answer a key question: what would a new user see on their feed if they followed every recommendation the platform's algorithm generated to join groups, watch videos and explore pages? The experiment ran for three weeks, during which the Pulwama attack took place in Kashmir. Here's what happened next:

“[T]he employee… said they were ‘shocked’ by the content flooding the news feed which ‘has become a near constant barrage of polarizing nationalist content, misinformation, and violence and gore.’

Seemingly benign and innocuous groups recommended by Facebook quickly morphed into something else altogether, where hate speech, unverified rumors and viral content ran rampant. The recommended groups were inundated with fake news, anti-Pakistan rhetoric and Islamophobic content. Much of the content was extremely graphic… ‘Following this test user’s News Feed, I’ve seen more images of dead people in the past three weeks than I’ve seen in my entire life total,’ the researcher wrote.”

#2 ‘Gateway groups’: A similar experiment with a test user was conducted in the United States—and produced almost identical results. More importantly, a related internal report found that the algorithm repeatedly pushed users toward extremist groups:

"The body of research consistently found Facebook pushed some users into 'rabbit holes,' increasingly narrow echo chambers where violent conspiracy theories thrived. People radicalized through these rabbit holes make up a small slice of total users, but at Facebook’s scale, that can mean millions of individuals."

A different report concluded: “Group joins can be an important signal and pathway for people going towards harmful and disruptive communities. Disrupting this path can prevent further harm.”

Why this matters in India: Many of the groups joined by the test user in India had tens of thousands of users. And an earlier 2019 report found Indian Facebook users tended to join large groups, with the country’s median group size at 140,000 members.

#3 The protected groups: Earlier this year, an internal report found that much of the violent anti-Muslim material was circulated by RSS-affiliated groups and users. But it also noted that Facebook hasn’t flagged the RSS as dangerous or recommended its removal “given political sensitivities.”

Another report called out the Bajrang Dal for using WhatsApp to “organize and incite violence”—and also noted that the group is linked to the BJP. Employees considered designating the Bajrang Dal a dangerous group, and listed it under a recommendation: “TAKEDOWN.” However, it is still active on Facebook.

Response to note: When contacted by the Wall Street Journal, the RSS said, “Facebook can approach the RSS anytime”—but noted that it had failed to do so. Bajrang Dal’s response was more blunt and revealing: “If they say we have broken the rules, why haven’t they removed us?”

#4 Lack of attention/resources: One big reason that Facebook has failed to stem the tide of hate is that it simply hasn’t invested enough resources. According to the documents, Facebook saw India as one of the most “at risk countries” in the world and identified both Hindi and Bengali languages as priorities for “automation on violating hostile speech.” And yet most of the anti-Muslim content was “never flagged or actioned” since Facebook lacked “classifiers” (hate-detecting algorithms) and “moderators” in Hindi and Bengali languages.

Big data point to note: Responding to the reporting, Facebook claims that it has since "invested significantly in technology to find hate speech in various languages, including Hindi and Bengali," which it says has "reduced the amount of hate speech that people see by half" in 2021.

But, but, but, as the New York Times notes, 87% of the company's global budget for time spent on classifying misinformation is allocated to the United States, and only 13% is set aside for the rest of the world. This is despite the fact that India is FB's biggest market, with over 340 million users, and nearly 400 million people there are on WhatsApp. By comparison, American users make up only 10% of the social network's daily active users.

One: Facebook's algorithms, rolled out in 2018, are designed to maximise engagement, meaning anything that keeps you spending hours on the platform. Quite simply, fear and hate are more powerful hooks than other emotions. And when a product tweak to crack down on hate has decreased engagement, the company has chosen not to implement it.

Two: The algorithms developed in 2019 to detect hateful content—called ‘classifiers’—only work in certain languages. And despite Facebook’s claims, reports from this year show that the controls for Hindi and Bengali are weak and ineffective. And many other Indian languages are entirely missing.

Three: The company's first priority is to protect its relationships with those who wield power. A 2020 internal document noted that "Facebook routinely makes exceptions for powerful actors when enforcing content policy." It also quotes a former Facebook chief security officer, who says this bias is even more pronounced outside the United States. In countries like India, "local policy heads are generally pulled from the ruling political party and are rarely drawn from disadvantaged ethnic groups, religious creeds or casts," which "naturally bends decision-making towards the powerful."

The bottom line: As we've seen, Facebook is only too happy to kowtow to the government's demands for stricter social media rules. But it will continue to optimise hate in India until and unless the government tells it not to.

All the latest reporting is in the Associated Press, New York Times and Wall Street Journal (paywall). NBC News looks at how 'gateway groups' function. MIT Technology Review has a very good deep dive into how Facebook's algorithms work and what we learned from whistleblower Frances Haugen. We highly recommend checking out our previous explainers for more context. The first places Facebook's actions in the context of its close relationship with Modi and the BJP. The second connects the dots with its big investment in Jio Platforms.
